Might a young player like Aaron Judge one day find himself in the record books? (via Arturo Pardavila III)

Do you remember record chases? Will Alex Rodriguez pass Hank Aaron? Or will it be Ken Griffey Jr.? Oh, right, Barry Bonds. How many strikeouts can Randy Johnson get? Can he pass 5,000? Can he challenge Nolan Ryan? And hits and wins and doubles and saves and on and on. Sure, they didn’t generally happen, but we entertained the notion.

Now, quickly, name a player who you think has even a ghost of a chance of breaking a major record. Albert Pujols isn’t going to make it. Neither will Miguel Cabrera. Mike Trout isn’t a compiler in the way he’d need to be. Clayton Kershaw isn’t going to get the innings he needs.

There’s no one. There aren’t even that many candidates to join the 3,000-hit club or the 500-homer club. We’ll have some pitchers get to 3,000 strikeouts, but not much over it.

I was looking at the record books recently and looking at the active players and wondering if maybe we are done seeing record chases. Maybe, I thought, the game has changed enough in the way players are used and evaluated that we aren’t going to see great compilers anymore.

My thinking was that players weren’t being allowed to hang on once they ceased to provide value in an overall sense and that this was probably different than in eras past when, I thought, players might play forever if they could still hit 30 homers. That, paired with the reduction in PEDs, which prolonged careers, I reasoned, may have permanently altered the record-breaking landscape.

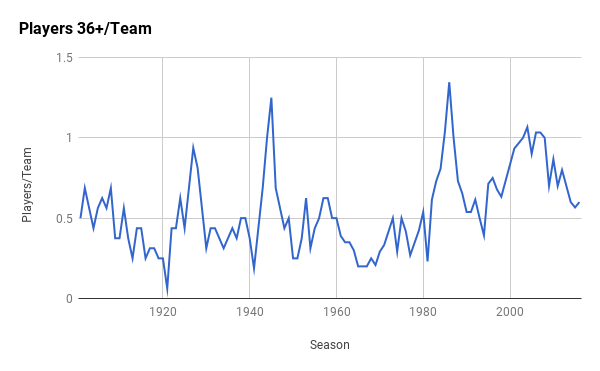

There’s a reasonably simple test for this. I went through baseball history and–starting in 1901–recorded how many players there were on a per-team basis who were at least 36 and got at least 200 plate appearances. Basically, old guys getting regular or semi-regular playing time. I then made a line graph. (I’m an English teacher, so please appreciate how special that is.) Here is said graph:

That’s a weird graph. I thought I would see a general decline in older players with maybe a spike during the PED era. But that’s not what happened at all. There are four notable spikes: the late 1920s, mid-’40s, mid-’80s and the (sinister music) early 2000s. The spike in the ’40s, one presumes, is the result of World War II, and the most recent spike is the PED era. But the other two had no explanation I could think of.
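For anyone who wants to reproduce the count behind that graph, here is a minimal Python sketch. It is not the exact procedure used for the chart. The row layout, the function name `old_regulars_per_team` and the sample data are all hypothetical, and it assumes a traded player's plate appearances are summed across his teams for the season.

```python
from collections import defaultdict

def old_regulars_per_team(rows, min_age=36, min_pa=200, start_year=1901):
    """Return {year: qualifying players per team}.

    rows: iterable of (year, team, player_id, age, plate_appearances),
    one row per player stint with a team (a hypothetical layout).
    """
    pa_by_player = defaultdict(int)   # (year, player_id) -> total PA that season
    age_by_player = {}                # (year, player_id) -> age that season
    teams_by_year = defaultdict(set)  # year -> teams seen that season

    for year, team, player_id, age, pa in rows:
        if year < start_year:
            continue
        teams_by_year[year].add(team)
        pa_by_player[(year, player_id)] += pa
        age_by_player[(year, player_id)] = age

    qualifiers = defaultdict(int)     # year -> count of old regulars
    for (year, player_id), pa in pa_by_player.items():
        if age_by_player[(year, player_id)] >= min_age and pa >= min_pa:
            qualifiers[year] += 1

    # Divide by the number of teams so eras with different league sizes compare.
    return {year: qualifiers[year] / len(teams)
            for year, teams in sorted(teams_by_year.items())}


# Made-up rows, for illustration only.
sample = [
    (1985, "AAA", "p1", 38, 450),
    (1985, "AAA", "p2", 36, 120),  # too few PA with AAA alone...
    (1985, "BBB", "p2", 36, 100),  # ...but 220 across both stints
    (1985, "BBB", "p3", 29, 600),  # too young
    (1986, "AAA", "p1", 39, 150),  # not enough playing time
    (1986, "BBB", "p3", 30, 610),
]
rates = old_regulars_per_team(sample)
print(rates)
```

Dividing by the number of teams matters because the league has grown from 16 teams to 30, so a raw count of old players would rise with expansion alone.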

While I was aware random variation was possible, I went looking for explanations anyway. Demographic trends could explain some of it. Baby Boomers hit their late 30s in the ’80s. After the 1890s, population growth in the U.S. declined dramatically, which could explain why players were able to hang on in the late ’20s: There simply wasn’t as much competition coming up behind them. Even the PED era syncs up with the children of Baby Boomers reaching their late 30s.

Which isn’t to say I had faith in this particular explanation. But the appearance of the spikes told me something about compilers. Though there were obvious exceptions, many of baseball’s great compilers finished their careers during the spikes. For instance, five of the 24 pitchers in the 300-win club got win No. 300 between 1982 and 1986. And seven of the 27 members in the 500-home run club got to No. 500 between 2001 and 2007.

Because of this, it mattered whether the spikes were random or had a direct cause. If they were random, we could expect another one (and thus another bunch of big, fancy numbers) at some random moment in the future. If each spike had a direct cause, then we’d need to have something happen in order to get another spike.

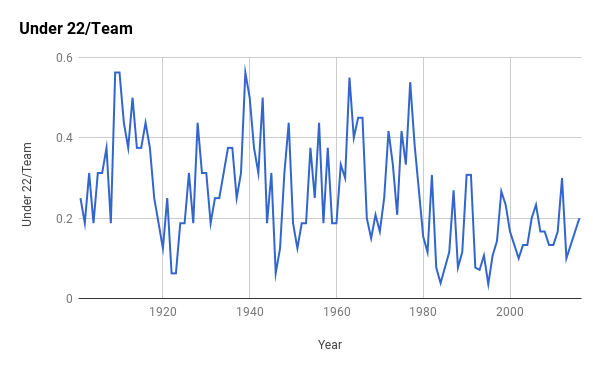

I mulled this for a while and decided the next step was to look for the prevalence of extra-young players. After all, to hit the kinds of numbers I’m looking for, a player needs to play into his late 30s, but he also needs to start pretty young. Here then is a chart showing the number of players per team who qualified for the batting title and were 22 or younger.

This is a bit more sporadic than our first chart, but interestingly, you can see some points that correspond to data in the earlier chart. Note, for instance, that young players crater in the ’20s and ’40s. There are peaks of young players in the 1900s and 1960s that correspond to the old-player peaks that occurred 15-20 years later.
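One rough way to check whether young-player peaks really line up with old-player peaks years later is to shift one series against the other and measure the correlation. The sketch below is only an illustration: the function names and the toy numbers are made up, and a correlation on short series like these says nothing about cause.

```python
from math import sqrt

def pearson(xs, ys):
    """Plain Pearson correlation of two equal-length lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)  # fails if either series is constant

def lagged_correlation(young, old, lag):
    """Correlate young[year] with old[year + lag] over the overlapping years.

    young, old: dicts mapping year -> players per team.
    """
    years = [y for y in sorted(young) if y + lag in old]
    if len(years) < 2:
        raise ValueError("not enough overlapping years")
    return pearson([young[y] for y in years], [old[y + lag] for y in years])


# Toy data: the "old" series is the "young" series delayed by three years.
young = {2000: 1.0, 2001: 3.0, 2002: 2.0, 2003: 5.0, 2004: 4.0}
old = {year + 3: value * 0.5 for year, value in young.items()}
r = lagged_correlation(young, old, lag=3)
print(round(r, 3))
```

With real per-team series, trying lags from 15 to 20 and seeing where the correlation is strongest would be one way to put a number on the pattern in the charts.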

Expansion years matter a lot here, as well. They thin out the talent pool and force young players into the majors earlier. It’s also interesting to note the peak in the late ’30s that didn’t have a corresponding old-player peak 15-20 years later. World War II took a huge toll on MLB (and on the country as a whole), and that toll shows up in granular ways like this one as well as in the better-known seasons lost by some of baseball’s greatest players.

What really stands out in this second chart, however, is what happened in the early ’80s, when young players were suddenly getting shots only about half as often. What happened, as you may already have figured out, is free agency. Teams couldn’t afford to let players grow into roles, so unless they were sure things, they weren’t given the opportunity. And I can tell you from looking that the names you see among the young players for those years tend to be Hall of Famers or players who would have been but for injury or other extenuating circumstance.

And so, I’m back to my original question: Does the current calculus used to evaluate whether a player should be in the majors allow for players to reach the milestones we all grew up paying attention to? I don’t know.

Things are certainly different now than they’ve ever been before. PEDs largely have been filtered out, which reduces the prevalence of older players. Free agency means many young players have to wait longer to get a shot. Neither of those is good if you’re looking for the next 300-game winner or 500-home run hitter.

However, the young players who do get shots also tend to be fantastic. Teams, seemingly, aren’t afraid to promote players like Mike Trout or Albert Pujols who force the issue, so while the demographics of baseball have narrowed in a general way, they may not have narrowed in the ways that matter for record chases and milestones. I guess I’ll check in with you in 15 or 20 years, and we’ll see what happened.